The assertion that "the opposite direction of the gradient is the direction of steepest descent" is frequently used to explain the principle of gradient descent (SGD). However, this statement is conditional: for instance, mathematically, a "direction" is a unit vector that depends on the definition of the "norm (magnitude)," and different norms yield different conclusions. In fact, Muon effectively changes the spectral norm for matrix parameters, thereby obtaining a new descent direction. Similarly, when we transition from unconstrained to constrained optimization, the direction of steepest descent may not necessarily be opposite to the gradient.

To address this, in this article, we inaugurate a new series that takes "constraints" as its central theme, re-examining the proposition of "steepest descent" and investigating what constitutes the "direction of fastest descent" under varying conditions.

Optimization Principle#

As the first installment, we commence with SGD to comprehend the mathematical meaning behind the statement "the opposite direction of the gradient is the direction of steepest descent," then apply this understanding to optimization on hyperspheres. Before proceeding, however, the author wishes to revisit the "Least Action Principle" for optimizers introduced in "Muon Sequel: Why Did We Choose to Experiment with Muon?"

This principle attempts to answer "what constitutes a good optimizer." Primarily, we desire models to converge as rapidly as possible; however, due to the inherent complexity of neural networks, overly large steps can destabilize training. Therefore, an effective optimizer should be both stable and fast, ideally significantly reducing loss without extensive model modifications. Mathematically, this is expressed as:

Here, $\mathcal{L}$ is the loss function, $\boldsymbol{w}\in\mathbb{R}^n$ is the parameter vector, $\Delta \boldsymbol{w}$ is the update vector, and $\rho(\Delta\boldsymbol{w})$ is some measure of the magnitude of $\Delta\boldsymbol{w}$. The objective is intuitive: within the constraint that the "step size" does not exceed $\eta$ (ensuring stability), we seek the update that maximizes the reduction in the loss function (ensuring speed). This constitutes the mathematical meaning of both the "Least Action Principle" and "steepest descent."

Problem Transformation#

Assuming $\eta$ is sufficiently small, $\Delta\boldsymbol{w}$ is also sufficiently small such that first-order approximations remain accurate. We can then replace $\mathcal{L}(\boldsymbol{w} +\Delta\boldsymbol{w})$ with $\mathcal{L}(\boldsymbol{w}) + \langle\boldsymbol{g},\Delta\boldsymbol{w}\rangle$, where $\boldsymbol{g} = \nabla_{\boldsymbol{w}}\mathcal{L}(\boldsymbol{w})$, yielding the equivalent objective:

This simplification reduces the optimization objective to a linear function of $\Delta \boldsymbol{w}$, lowering the difficulty of solution. Further, we set $\Delta \boldsymbol{w} = -\kappa \boldsymbol{\varphi}$, where $\rho(\boldsymbol{\varphi})=1$, making the objective equivalent to:

Assuming we can find at least one feasible $\boldsymbol{\varphi}$ such that $\langle\boldsymbol{g},\boldsymbol{\varphi}\rangle\geq 0$, then $\max\limits_{\kappa\in[0,\eta]} \kappa\langle\boldsymbol{g},\boldsymbol{\varphi}\rangle = \eta\langle\boldsymbol{g},\boldsymbol{\varphi}\rangle$, meaning the optimization over $\kappa$ can be solved in advance, yielding $\kappa=\eta$. This ultimately reduces to optimization over $\boldsymbol{\varphi}$ alone:

Here, $\boldsymbol{\varphi}$ satisfies the condition that some "magnitude" $\rho(\boldsymbol{\varphi})$ equals 1, so it represents a definition of a "direction vector." Maximizing its inner product with the gradient $\boldsymbol{g}$ corresponds to finding the direction that yields the fastest reduction in loss (i.e., the direction opposite to $\boldsymbol{\varphi}$).

Gradient Descent#

From Equation $\eqref{eq:core}$, we observe that for the "direction of steepest descent," the sole uncertainty lies in the metric $\rho$. This is a fundamental prior (Inductive Bias) within optimizers; different metrics will yield different steepest descent directions. The simplest case is the L2 norm, or Euclidean norm $\rho(\boldsymbol{\varphi})=\Vert \boldsymbol{\varphi} \Vert_2$, which corresponds to our conventional notion of magnitude. Here, we apply the Cauchy-Schwarz inequality:

Equality holds when $\boldsymbol{\varphi} \propto\boldsymbol{g}$. Combined with the unit magnitude condition, we obtain $\boldsymbol{\varphi}=\boldsymbol{g}/\Vert\boldsymbol{g}\Vert_2$, which is precisely the gradient direction. Thus, the statement "the opposite direction of the gradient is the direction of steepest descent" presupposes that the chosen metric is the Euclidean norm. More generally, consider the $p$-norm:

The Cauchy-Schwarz inequality generalizes to Hölder's inequality:

Equality holds when $\boldsymbol{\varphi}^{[p]} \propto\boldsymbol{g}^{[q]}$, leading to the solution:

The optimizer using this as its direction vector is called pbSGD, introduced in "pbSGD: Powered Stochastic Gradient Descent Methods for Accelerated Non-Convex Optimization". It has two special cases: when $p=q=2$, it reduces to SGD; and when $p\to\infty$, $q\to 1$, where $|g_i|^{q/p}\to 1$, the update direction becomes the sign function of the gradient, i.e., SignSGD.

Hypersphere Constraint#

In the preceding discussion, we only imposed constraints on the parameter increment $\Delta\boldsymbol{w}$. Next, we aim to apply constraints to the parameters $\boldsymbol{w}$ themselves. Specifically, we assume the parameters $\boldsymbol{w}$ lie on a unit sphere, and we require that the updated parameters $\boldsymbol{w}+\Delta\boldsymbol{w}$ also remain on the unit sphere (refer to "Hypersphere"). Starting from objective (4), we can formulate the new objective as:

We continue to adhere to the principle that "$\eta$ is sufficiently small for first-order approximations to be adequate." This yields:

Thus, the constraint is approximated by the linear form $\langle \boldsymbol{w}, \boldsymbol{\varphi}\rangle=0$. To solve this constrained optimization, we introduce a Lagrange multiplier $\lambda$:

Equality holds when $\boldsymbol{\varphi}\propto \boldsymbol{g} + \lambda\boldsymbol{w}$. Combined with the constraints $\Vert\boldsymbol{\varphi}\Vert_2=1$, $\langle \boldsymbol{w}, \boldsymbol{\varphi}\rangle=0$, and $\Vert\boldsymbol{w}\Vert_2=1$, we solve for:

Note that now $\Vert\boldsymbol{w}\Vert_2=1$, $\Vert\boldsymbol{\varphi}\Vert_2=1$, and $\boldsymbol{w}$ and $\boldsymbol{\varphi}$ are orthogonal. Consequently, the magnitude of $\boldsymbol{w} - \eta\boldsymbol{\varphi}$ is not precisely 1, but rather $\sqrt{1 + \eta^2}=1 + \eta^2/2 + \cdots$, accurate to $\mathcal{O}(\eta^2)$. This aligns with our earlier assumption that "first-order terms in $\eta$ suffice." If we require the updated parameter magnitude to be strictly 1, we can add a renormalization step to the update rule:

Geometric Interpretation#

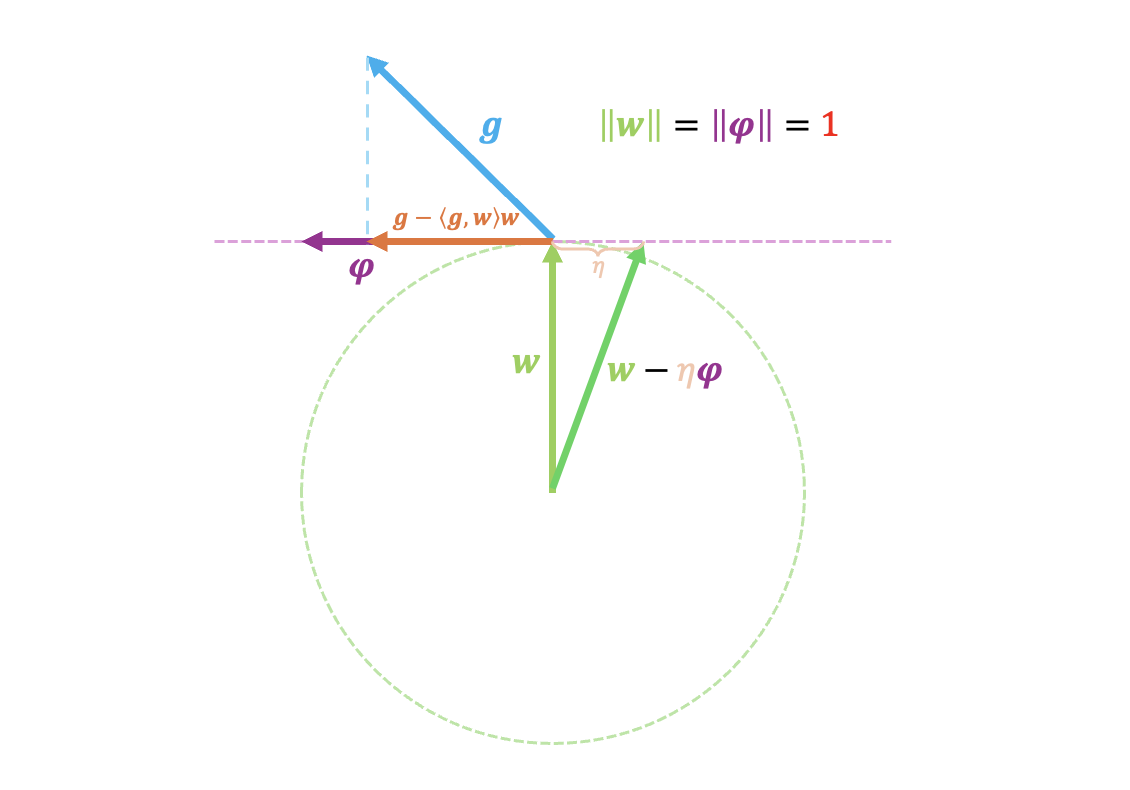

Earlier, through the "first-order approximation suffices" principle, we simplified the nonlinear constraint $\Vert\boldsymbol{w}-\eta\boldsymbol{\varphi}\Vert_2 = 1$ to the linear constraint $\langle \boldsymbol{w}, \boldsymbol{\varphi}\rangle=0$. The latter has a geometric meaning: "orthogonal to $\boldsymbol{w}$." There is a more technical term for this: the "tangent space" of the manifold defined by $\Vert\boldsymbol{w}\Vert_2=1$. The operation $\boldsymbol{g} - \langle \boldsymbol{g}, \boldsymbol{w}\rangle\boldsymbol{w}$ corresponds precisely to projecting the gradient $\boldsymbol{g}$ onto this tangent space.

Thus, we are fortunate: SGD on spheres possesses a very clear geometric interpretation, as illustrated below:

Many readers likely appreciate this geometric perspective—it is indeed aesthetically pleasing. However, it is essential to emphasize: one should prioritize a thorough understanding of the algebraic solution process. Clear geometric interpretations are often a luxury, serendipitous rather than guaranteed; in most cases, the intricate algebraic procedure embodies the underlying essence.

General Results#

Are readers now inclined to generalize this to arbitrary $p$-norms? Let us attempt this together and examine the difficulties encountered. The problem becomes:

The first-order approximation transforms $\Vert\boldsymbol{w}-\eta\boldsymbol{\varphi}\Vert_p = 1$ into $\langle\boldsymbol{w}^{[p-1]},\boldsymbol{\varphi}\rangle = 0$. Introducing a Lagrange multiplier $\lambda$:

The condition for equality is:

Up to this point, no substantive difficulties have arisen. However, we next need to determine $\lambda$ such that $\langle\boldsymbol{w}^{[p-1]},\boldsymbol{\varphi}\rangle = 0$. When $p \neq 2$, this constitutes a complex nonlinear equation with no straightforward analytical solution (although, if solved, we are guaranteed an optimal solution by Hölder's inequality). Therefore, for general $p$, we must halt our analytical pursuit here. When encountering practical instances with $p\neq 2$, we will need to explore numerical solution methods.

Nevertheless, besides $p=2$, we can attempt to solve the limit $p\to\infty$. In this case, $\boldsymbol{\varphi}=\sign(\boldsymbol{g} + \lambda\boldsymbol{w}^{[p-1]})$. The condition $\Vert\boldsymbol{w}\Vert_p=1$ implies that the maximum among $|w_1|,|w_2|,\cdots,|w_n|$ equals 1. If we further assume a unique maximum, then $\boldsymbol{w}^{[p-1]}$ is a one-hot vector: $\pm 1$ at the position of maximum absolute value, and zero elsewhere. Under this assumption, we can solve for $\lambda$, resulting in clipping the gradient at the maximum position to zero.

Summary#

This article inaugurates a new series focusing on optimization problems under "equality constraints," aiming to identify the "direction of steepest descent" for common constraint types. As the first installment, it examines a variant of SGD under the "hypersphere" constraint, establishing foundational results for constrained optimization on manifolds.

Original Article: Su Jianlin. Manifold Gradient Descent: 1. SGD on Hyperspheres. Scientific Spaces.

How to cite this translation:

BibTeX: